From Prototype to Product: An Engineer's Playbook for Shipping LLMs

After two years of building with Large Language Models, I've seen a pattern emerge: teams are often rewarded more for using AI than for driving measurable outcomes that happen to be LLM-enhanced. It's easier than ever to build a shiny demo, but the path from that demo to a launched, impactful product remains as challenging as ever.

This isn't for lack of trying. The truth is that achieving high-quality, production-ready results is hard. The current Cambrian explosion of AI-powered prototypes is exciting, but how many will actually land?

This post is a battle-tested playbook for closing that gap. We'll walk through a five-step framework for taking an LLM project from a clever idea to a production-grade feature that delivers real-world value.

We will cover:

- Rapid Idea Validation: Confirming viability in hours, not weeks.

- Fast Development Loops: Building a developer workflow to test prompts and ideas in minutes.

- Robust Evals: Creating a testbed with good and bad evals to benchmark performance during your development loops.

- Prompt Debugging: Using "thinking tokens" to understand and fix your prompt's behavior.

- Code-vs-Prompt Balance: Knowing when to engineer your prompt further and when to reach for programmatic, "traditional" solutions instead.

Throughout this overview, we will apply the playbook above to a single project brief to see how it works in practice:

CUJ (critical user journey): I want to automate code reviews so that they catch simple logical issues in the code, such as newly added variables that are never used. For example, the new line in this snippet:

```
// existing code
// (new code)
var a = "wow"
// existing code
```

should be flagged automatically during review, so that a human reviewer doesn't need to find these cases manually and can focus on the business logic instead.

1. Rapid idea validation

With our project brief, the first step is rapid validation. Ideas are cheap, but building is expensive. A quick 'proof of life' demo is the critical gate between abandoning an idea and moving forward.

Too often, projects jump straight into the technical underpinnings, setting up infrastructure just to reach a proof of concept. Infatuation with the technology has a way of losing the product in technological wanderlust: it is not uncommon to see someone spend _weeks_ learning the latest MCP/Agent/$TECH only to find out that the idea was a dead end.

The first step is to create an example of a case we want to be able to catch.

Using https://github.com/webpack/tooling/pull/25 as a base, we can append `.patch` to the URL to get a patch payload, and then modify the patch slightly to insert our dead-code example:

```diff
From 59ff615d0408c68ce2874c1f571445bdb5082c2a Mon Sep 17 00:00:00 2001
From: alexander-akait <[email protected]>
Date: Thu, 10 Jul 2025 18:19:59 +0300
Subject: [PATCH] fix: handle more types with `NodeJS` prefix

---
 generate-types/index.js | 7 +++++++
 1 file changed, 7 insertions(+)

diff --git a/generate-types/index.js b/generate-types/index.js
index 8251254..f211043 100644
--- a/generate-types/index.js
+++ b/generate-types/index.js
@@ -841,6 +841,13 @@ const printError = (diagnostic) => {
+  let a = "checkerState"
   /** @type {ts.TypeNode} */
   let typeNode = /** @type {any} */ (type)._typeNode;
   if (type.aliasSymbol) {
+    const fullEscapedName = getFullEscapedName(type.aliasSymbol);
+    if (fullEscapedName.includes("NodeJS.")) {
+      return {
+        type: "primitive",
+        name: fullEscapedName,
+      };
+    }
     const aliasType = checker.getDeclaredTypeOfSymbol(type.aliasSymbol);
     if (aliasType && aliasType !== type) {
       const typeArguments = type.aliasTypeArguments || [];
```
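Editing the patch by hand is perfectly fine for a one-off validation. If you later want this test case to be reproducible, the same edit can be scripted. A minimal sketch in Python, assuming the `.patch` text has already been downloaded; `inject_added_line` is a hypothetical helper that inserts one added line right after a chosen hunk header and bumps that hunk's new-file line count so the header accounts for the extra line.

```python
import re

# Matches a unified-diff hunk header such as "@@ -841,6 +841,13 @@ ...".
HUNK_RE = re.compile(r"^@@ -(\d+(?:,\d+)?) \+(\d+)(?:,(\d+))? @@")


def inject_added_line(patch: str, new_line: str, hunk_index: int = 0) -> str:
    """Insert "+new_line" directly after the hunk_index-th hunk header.

    The new-file line count in that header is incremented by one; the rest of
    the patch is left untouched.
    """
    lines = patch.splitlines()
    seen = -1
    for i, text in enumerate(lines):
        match = HUNK_RE.match(text)
        if not match:
            continue
        seen += 1
        if seen != hunk_index:
            continue
        old_range, new_start, new_len = match.groups()
        # A missing count means a one-line range in unified diff syntax.
        count = int(new_len) if new_len is not None else 1
        lines[i] = f"@@ -{old_range} +{new_start},{count + 1} @@{text[match.end():]}"
        lines.insert(i + 1, "+" + new_line)
        return "\n".join(lines) + "\n"
    raise ValueError(f"patch has no hunk at index {hunk_index}")


# Example: plant the dead-code line from the patch above.
# patch_text = inject_added_line(patch_text, '  let a = "checkerState"')
```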

Now, instead of setting up a complex development environment, we can head straight to a tool like Google's AI Studio. We can paste our patch file directly into the prompt and see if we can get a result in seconds.

Prompt:

```
Given the following DIFF, identify code which given the diff looks to be unused, report on the lines - do not explain your work

$DIFF_HERE
```

Result:

```
- let a = "checkerState"
```

Immediately, we have validated (with a sample size of 1) that this idea is potentially viable, so it may make sense to advance to the next level of project vetting.

2. Establishing a fast development loop

A successful proof of concept is key, but to develop it into a real feature you need a fast development loop, one measured in minutes, not hours. It must be trivial and routine to tweak your prompt or underlying tech and see the effect as quickly as possible. While this seems obvious, the glare of new LLM tech often causes people to lose sight of this basic requirement of normal software development. In the push to ramp up to "real" results, engineers lose the ability to play in the sandbox, and iteration slows to a deploy-and-soak cycle instead of a rapid local development loop.

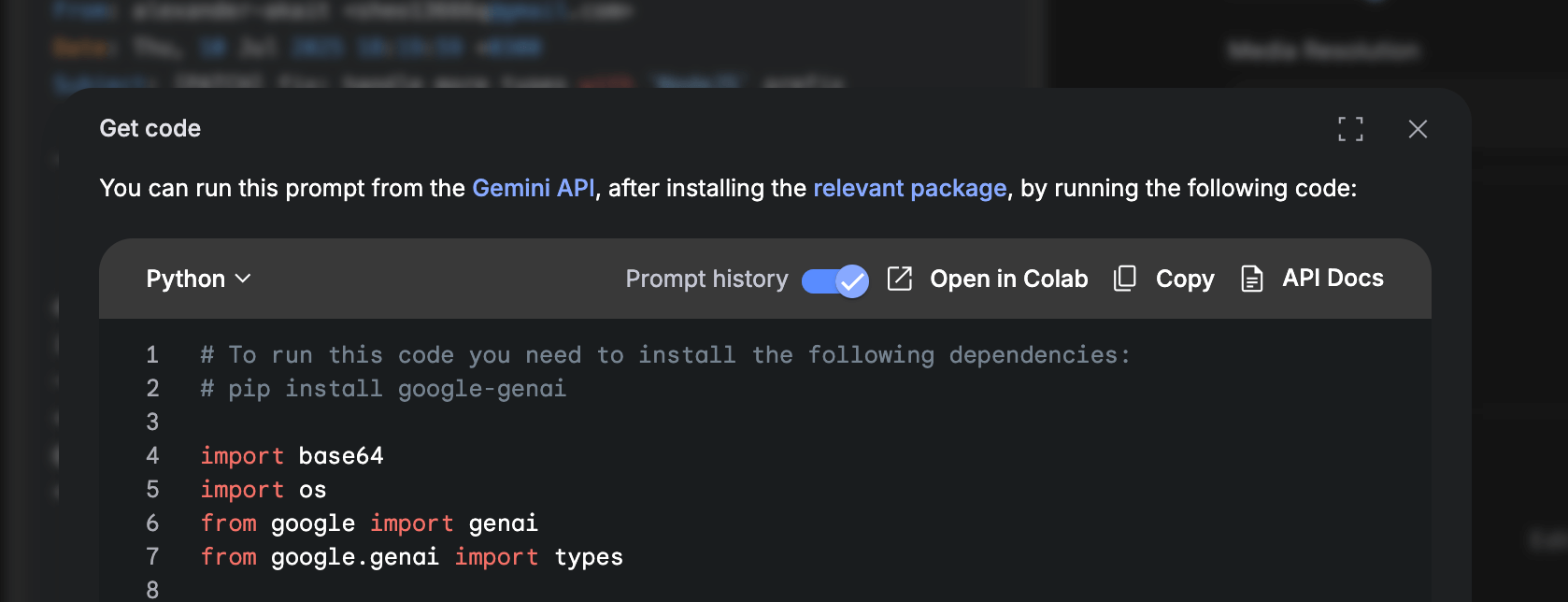

Luckily, AI Studio makes it easy to take our initial validation prompt and export it to a Colab notebook, which gives us a way to run our workflow programmatically instead of going to a UI and copy-pasting every time. This is critical for scaling out our ability to iterate on the prompt and evaluate the system's performance.
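A minimal sketch of what that notebook loop can look like, assuming the `google-genai` Python SDK (`pip install google-genai`), an API key in the `GEMINI_API_KEY` environment variable, and the `gemini-2.5-flash` model name; the code AI Studio exports for you may differ. `build_prompt`, `gemini_generate`, and `run_prompt` are illustrative names. Passing the model call in as a function keeps the loop testable without network access.

```python
import os
from typing import Callable

# The step-1 validation prompt, with a placeholder where the diff goes.
PROMPT_TEMPLATE = (
    "Given the following DIFF, identify code which given the diff looks to be "
    "unused, report on the lines - do not explain your work\n\n{diff}"
)


def build_prompt(diff: str) -> str:
    """Fill the diff into the prompt template."""
    return PROMPT_TEMPLATE.format(diff=diff)


def gemini_generate(prompt: str, model: str = "gemini-2.5-flash") -> str:
    """Send one prompt to Gemini via the google-genai SDK and return the text reply."""
    # Imported lazily so the rest of this sketch runs without the SDK installed.
    from google import genai

    client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])
    response = client.models.generate_content(model=model, contents=prompt)
    return response.text


def run_prompt(diff: str, generate: Callable[[str], str] = gemini_generate) -> str:
    """Run the prompt on one diff; `generate` is swappable so the loop can run offline."""
    return generate(build_prompt(diff))
```

With this in place, changing the prompt and re-running it over a diff is a single cell execution rather than a round trip through a UI.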

This programmatic workflow is non-negotiable. Friction in this step, or skipping it entirely, is what kills LLM-powered product iteration and separates existence from excellence.

3. Building your Test Bed: TDD for LLMs

Once you have a fast development loop, you need to know if you're making progress. The best way to do this is to build an evaluation set. Think of it as Test-Driven Development (TDD) for LLMs. It isn't scary; it's the single practice that separates mediocre results from excellent ones. In my experience, no successful LLM-powered project exists without some form of offline evaluation.

A great source for real-world test cases is mining existing data covering the user space you're trying to operate in. For our "unused code" agent, we can use the GitHub archive on BigQuery to find real code reviews where humans flagged exactly this issue.

```sql
SELECT
  repo.name,
  actor.login AS user,
  JSON_EXTRACT_SCALAR(payload, '$.comment.body') AS comment_body,
  JSON_EXTRACT_SCALAR(payload, '$.comment.html_url') AS comment_url
FROM `githubarchive.day.202506*`
WHERE type = 'CommitCommentEvent'
  # remove bots
  AND actor.login NOT LIKE "%[bot]%"
  # filter out markdown
  AND JSON_EXTRACT_SCALAR(payload, '$.comment.body') NOT LIKE "%#%"
  AND JSON_EXTRACT_SCALAR(payload, '$.comment.body') NOT LIKE "%<details%"
  AND LOWER(JSON_EXTRACT_SCALAR(payload, '$.comment.body')) LIKE '%unused%'
LIMIT 100;
```
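To go from query results to patches, each `comment_url` can be rewritten into a `.patch` URL for the commented-on commit. A minimal sketch, assuming commit-comment URLs have the shape `https://github.com/<owner>/<repo>/commit/<sha>#commitcomment-<id>`, and that the `google-cloud-bigquery` client library is installed and authenticated for the query step; both function names are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def comment_url_to_patch_url(comment_url: str) -> str:
    """Turn a commit-comment URL into the URL of that commit's patch.

    Assumes GitHub's commit-comment URL shape:
    https://github.com/<owner>/<repo>/commit/<sha>#commitcomment-<id>
    """
    parts = urlsplit(comment_url)
    # Drop the "#commitcomment-..." fragment and any query string, then append ".patch".
    path = parts.path.rstrip("/") + ".patch"
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


def query_comment_urls(sql: str) -> list[str]:
    """Run the query above and return its comment_url column (needs GCP credentials)."""
    # Imported lazily so the URL helper works without google-cloud-bigquery installed.
    from google.cloud import bigquery

    client = bigquery.Client()
    return [row["comment_url"] for row in client.query(sql).result()]
```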

To keep this example focused, we'll select three real-world commits identified by our query. We'll use these as our initial test bench, complete with the "ground truth" of what a human reviewer found (1,2,3).

We can then turn these URLs into simple evaluation cases that our notebook can run programmatically to measure how well our agent is performing.

```python
urls = [
    dict(
        url="https://github.com/codepath/site-unit2-project1-music-playlist-explorer-starter/commit/fb6d805d55b5774f3f71ae96c62fd0550cb06ea7.patch",
        human_found_miss="""
music-playlist-creator/script.js - line 2
""",
    ),
    dict(
        url="https://github.com/aws-samples/synthetics-canary-local-debugging-sample/commit/c772cfda34fa5d582b52f02fe439a47df6fc7c96.patch",
        human_found_miss="""
MonitoringAppGeneric.java - line 17
""",
    ),
    dict(
        url="https://github.com/nmap/nmap/commit/671b6490bf27253be759dfc979d20f4622414b7e.patch",
        human_found_miss="""
scripts/multicast-profinet-discovery.nse - line 113
scripts/multicast-profinet-discovery.nse - line 118
""",
    ),
]
```
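Before the prompt can run on these cases, each patch has to be downloaded. A minimal sketch, assuming each case's `url` is fetched with the standard library and cached on the dict under a `payload` key; `fetch_text` and `attach_payloads` are hypothetical names, and the fetcher is injectable so the loop can run offline.

```python
import urllib.request
from typing import Callable


def fetch_text(url: str, timeout: float = 30.0) -> str:
    """Download a URL (here, a GitHub .patch URL) and decode it as UTF-8."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def attach_payloads(cases: list[dict], fetch: Callable[[str], str] = fetch_text) -> list[dict]:
    """Store each case's patch text under "payload", fetching only cases that lack one."""
    for case in cases:
        if "payload" not in case:
            case["payload"] = fetch(case["url"])
    return cases
```

Caching the payload on the case means re-running the eval after a prompt tweak costs only the model calls, not another round of downloads.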

Running our initial, simple prompt against these three test cases was immediately illuminating. It failed on most of them, but more importantly, it showed us why:

- It was getting confused by non-code files.

- It was flagging code that existed before the patch, not just newly added code.

- Its output format was inconsistent and hard to parse.
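Turning failures like these into a number makes progress visible from run to run. A minimal recall scorer, assuming the model answers in the `# Unused code report` format that the evolved prompt in this section asks for (a `file name:` entry followed by `` `code` line N `` entries) and that the human notes use the `path - line N` shape from the cases above. All names are hypothetical, and `line_tolerance` is an assumed knob for off-by-one line numbers between a human note and the model's report.

```python
import re

# One expected miss per line, e.g. "scripts/multicast-profinet-discovery.nse - line 113".
EXPECTED_RE = re.compile(r"^\s*(\S+)\s+-\s+line\s+(\d+)\s*$")
# Tokens in the model's report: a "file name: `path`" entry or a "line N" mention.
REPORT_TOKEN_RE = re.compile(r"file name:\s*`([^`]+)`|\bline\s+(\d+)")


def parse_expected(human_found_miss: str) -> set[tuple[str, int]]:
    """Extract (file, line) pairs from a human_found_miss string."""
    found = set()
    for text in human_found_miss.splitlines():
        match = EXPECTED_RE.match(text)
        if match:
            found.add((match.group(1), int(match.group(2))))
    return found


def parse_report(report: str) -> set[tuple[str, int]]:
    """Extract (file, line) pairs from a "# Unused code report" style answer."""
    found = set()
    current_file = None
    for match in REPORT_TOKEN_RE.finditer(report):
        if match.group(1) is not None:
            current_file = match.group(1).strip()
        elif current_file is not None:
            found.add((current_file, int(match.group(2))))
    return found


def same_file(expected: str, reported: str) -> bool:
    # Human notes often give a shorter path than the model, so compare on path suffixes.
    return (
        expected == reported
        or reported.endswith("/" + expected)
        or expected.endswith("/" + reported)
    )


def recall(expected: set[tuple[str, int]], reported: set[tuple[str, int]],
           line_tolerance: int = 0) -> float:
    """Fraction of human-found misses that the model also reported."""
    if not expected:
        return 1.0
    hits = sum(
        1
        for exp_file, exp_line in expected
        if any(
            same_file(exp_file, rep_file) and abs(exp_line - rep_line) <= line_tolerance
            for rep_file, rep_line in reported
        )
    )
    return hits / len(expected)
```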

This is the power of an eval set. It turns a vague sense of "it's not working" into a concrete list of problems and examples to solve. Armed with this knowledge, we evolved the prompt from this:

````
Given the following DIFF, identify code which given the diff looks to be unused, report on the lines - do not explain your work

```{diff}```
````

To this:

```
Given the following DIFF, identify newly added code which given the diff are unused. Unused typically is indicated by the newly added variables or functions not being referenced or used in the given patch.

SKIP config files, and package dependency files and focus on only CODE changes for the report.

Report on the lines in the following format:

# Unused code report

- file name: `my-file/name.js`
  - unused lines:
    - `event.stopPropagation` line 45

Do not explain your work, only report very high confidence findings on code.
```

With these targeted changes, recall on our test set jumped from 20% to 100%. We now had a reliable signal to guide our work. But while we could see what was happening, we still couldn't see why. That brings us to debugging the prompt itself.

4. From Black Box to Debugger: Using Thinking Tokens

Prompt engineering can at times feel like a black box: you try to convince (or threaten) the LLM into doing what you want. Until very recently, debugging a prompt's behavior felt more like magic than science, with literal books written on the topic. Now that LLM providers expose thinking budgets and thinking tokens, we can suddenly pull back the covers on how the model gets from the prompt and context to its final generated tokens.

The trick is to turn them on (Gemini, OpenAI, Claude) and then inspect the thoughts to understand the current behavior and how the prompt is shaping it. In many cases the model will tell you where it is spending time, and it will often think out loud about how it is struggling with some of the constraints.
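With Gemini, for example, the `google-genai` Python SDK can return thought summaries alongside the answer when `include_thoughts=True` is set in the thinking config, and each returned part carries a `thought` flag. A minimal sketch, assuming that SDK, a `GEMINI_API_KEY` environment variable, and the `gemini-2.5-flash` model (OpenAI and Anthropic expose reasoning through their own, different APIs). `split_thoughts` and `generate_with_thoughts` are hypothetical helper names.

```python
import os


def split_thoughts(parts) -> tuple[str, str]:
    """Separate thought-summary parts from answer parts of one response candidate."""
    thoughts, answer = [], []
    for part in parts:
        text = getattr(part, "text", None)
        if not text:
            continue  # skip non-text parts (e.g. function calls)
        # The SDK marks thought-summary parts with part.thought == True.
        (thoughts if getattr(part, "thought", None) else answer).append(text)
    return "\n".join(thoughts), "\n".join(answer)


def generate_with_thoughts(prompt: str, model: str = "gemini-2.5-flash") -> tuple[str, str]:
    """Call Gemini with thought summaries enabled; returns (thoughts, answer)."""
    # Imported lazily so split_thoughts can be used without the SDK installed.
    from google import genai
    from google.genai import types

    client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])
    response = client.models.generate_content(
        model=model,
        contents=prompt,
        config=types.GenerateContentConfig(
            thinking_config=types.ThinkingConfig(include_thoughts=True),
        ),
    )
    return split_thoughts(response.candidates[0].content.parts)
```

Printing `thoughts` for a failing eval case next to its `answer` is what produces traces like the ones that follow.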

Let's look at one of our test cases that was still producing a noisy output, flagging unused imports:

```
# Unused code report

- file name: `java-canary/src/main/java/org/example/monitoring/MonitoringAppGeneric.java`
  - unused lines:
    - `int status=con.getResponseCode()` line 18
- file name: `java-canary/src/main/java/org/example/monitoring/MonitoringAppWithSteps.java`
  - unused lines:
    - `import software.amazon.synthetics.StepConfiguration` line 9
    - `import software.amazon.synthetics.StepOptions` line 10
    - `return;` line 17
```

This isn't quite right; we don't care about unused imports. But why did the model include them? Let's look at its thoughts:

Thoughts:


**Pinpointing Unused Code**

I've begun dissecting the diff, zeroing in on new additions. My approach focuses on specific file types, excluding configuration files. Currently, I'm parsing the file tree to extract relevant new code. Next, I'll filter out the obvious non-code content and then concentrate on further analysis of the remaining additions.

**Examining New Java Files**

I'm now focusing on the Java code. I've pinpointed the `MonitoringAppGeneric.java` file and started analyzing its contents. I've noted the package, imports, and method declarations within the file. My next step involves scrutinizing the usage of the imports and the `handler()` method within the file's scope.

**Investigating Potential Unused Code**

I've carefully examined the `MonitoringAppGeneric.java` file. It appears the `status` variable, obtained from the connection's response code, isn't being utilized. Also, the import `software.amazon.synthetics.StepConfiguration` in `MonitoringAppWithSteps.java` is unused. Both of these are strong candidates for unused code. I'm focusing on confirming these findings and identifying their impact, if any.

**Examining Potential Dead Code**

After a deeper dive, I've confirmed my initial observation. In `MonitoringAppGeneric.java`, the variable `status` is indeed assigned a value but remains unused within the scope. Similarly, the imports `StepConfiguration` and `StepOptions` are not used in `MonitoringAppWithSteps.java`. I'm now assessing how these unused elements impact overall functionality and exploring their potential for removal.

**Pinpointing Unused Elements**

I've examined the code for potential unused elements. Specifically, in `MonitoringAppGeneric.java`, the variable `status` is assigned a value but isn't subsequently used. Similarly, in `MonitoringAppWithSteps.java`, the imports `StepConfiguration` and `StepOptions` are not referenced. I'm focused on confirming their redundancy and assessing implications.

The answer is right there in plain sight. In its thoughts, the model explicitly states it is "Investigating Potential Unused Code" and then mentions scrutinizing "the usage of the imports." It's doing exactly what it thinks we want, but its interpretation is too broad.

The model told us where it was getting confused. The fix becomes obvious: add a line to the prompt telling it to ignore imports.

```
SKIP reporting on unused imports, and focus on variable declarations.
```

After adding this line and re-running, the Thoughts confirm the new behavior and the final output is clean. This technique transforms prompt engineering from a frustrating guessing game into a systematic debugging process. You're no longer guessing; you're reading the stack trace.
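Reading thoughts by hand works for one case; across a whole eval set it can help to search them. A rough sketch, with hypothetical helper names and a naive regex sentence split, that pulls out every sentence of a thought summary mentioning a given term (such as "import") and groups the hits by eval case:

```python
import re


def sentences_mentioning(thoughts: str, term: str) -> list[str]:
    """Return the sentences in `thoughts` that mention `term` (case-insensitive)."""
    # Naive split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", thoughts)
    needle = term.lower()
    return [s.strip() for s in sentences if needle in s.lower()]


def scan_eval_thoughts(thoughts_by_case: dict[str, str], term: str) -> dict[str, list[str]]:
    """Map each eval case to the thought sentences that mention `term`, skipping cases with none."""
    return {
        case: hits
        for case, text in thoughts_by_case.items()
        if (hits := sentences_mentioning(text, term))
    }
```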

5. Knowing when to Code

The magic of LLMs is infatuating. It makes you believe you can solve any problem with a clever enough prompt. This is a trap. In my experience, the most common failure mode for new LLM projects is trying to force the model to do everything.

LLMs are powerful reasoning engines, but they are also non-deterministic and can be unreliable at precise, rule-based tasks. The most effective solutions treat the LLM as one powerful component in a larger system, not as the entire system itself.

Let's look at our code review agent one last time. We were struggling to make the prompt ignore lines in the diff that weren't additions. We could have spent hours trying to engineer the perfect instruction to "only pay attention to lines starting with '+'", but there's a better way.

A few lines of Python can pre-process the input with perfect accuracy:

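```python
for url_dict in urls:
    if "payload" in url_dict:
        lines = url_dict["payload"].splitlines()
        # Keep only lines that start with "+": added code plus the "+++ b/<path>"
        # file headers, so file names survive. Context lines, removed lines, and
        # hunk headers are dropped. No .strip(): diff markers are always in column 0,
        # and stripping would let indented context lines that begin with "+" or "-" through.
        filtered_lines = [line for line in lines if line.startswith("+")]
        url_dict["payload"] = "\n".join(filtered_lines)
```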

This simple pre-processing step is cheaper, faster, and 100% reliable. It removes noise from the LLM's input, allowing the prompt to focus on its core competency: reasoning about the semantic meaning of the added code, not parsing diff syntax.
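The same principle can go one step further. The evolved prompt asks for line numbers, but once hunk headers are filtered out the model has no way to know them. A hypothetical extension, not part of the original notebook: parse each `@@ -a,b +c,d @@` hunk header in code and label every added line with its file and new-file line number, so the LLM only has to copy them. It assumes a well-formed unified diff whose hunk counts are accurate.

```python
import re

# Captures old length, new start, and new length from "@@ -a,b +c,d @@".
HUNK_HEADER = re.compile(r"^@@ -\d+(?:,(\d+))? \+(\d+)(?:,(\d+))? @@")


def added_lines_with_numbers(patch: str) -> list[tuple[str, int, str]]:
    """Return (file, new_line_number, code) for every added line in a unified diff."""
    results = []
    current_file = None
    new_no = 0
    old_left = new_left = 0  # lines still expected in the current hunk
    for line in patch.splitlines():
        if old_left > 0 or new_left > 0:
            # Inside a hunk: classify by the first character.
            if line.startswith("+"):
                results.append((current_file, new_no, line[1:]))
                new_no += 1
                new_left -= 1
            elif line.startswith("-"):
                old_left -= 1  # removed lines don't exist in the new file
            elif line.startswith("\\"):
                pass  # "\ No newline at end of file"
            else:
                # Context line: present in both old and new file.
                new_no += 1
                old_left -= 1
                new_left -= 1
            continue
        if line.startswith("+++ "):
            path = line[4:].split("\t")[0]
            current_file = path[2:] if path.startswith("b/") else path
            continue
        match = HUNK_HEADER.match(line)
        if match:
            old_len, new_start, new_len = match.groups()
            # A missing count means a one-line range.
            old_left = int(old_len) if old_len is not None else 1
            new_left = int(new_len) if new_len is not None else 1
            new_no = int(new_start)
    return results


def format_added_lines(added: list[tuple[str, int, str]]) -> str:
    """Render as "path:line: code", one added line per row, for the prompt."""
    return "\n".join(f"{path}:{line_no}: {code}" for path, line_no, code in added)
```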

This leads to a critical heuristic for building with LLMs: Use code for deterministic tasks; use the LLM for reasoning tasks.

Before you try to solve a problem in your prompt, ask yourself:

- Can this be done with a simple rule, filter, or regex? If yes, use code.

- Does this require understanding context, intent, or ambiguity? If yes, use the LLM.
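As a concrete instance of the first question: the evolved prompt tells the model to "SKIP config files, and package dependency files", which is a rule that code can apply before the LLM ever sees the diff. A minimal sketch, assuming `git format-patch`-style input with `diff --git a/<path> b/<path>` headers and paths without spaces; `drop_skipped_files` is a hypothetical name, and the skip list is an illustrative starting point rather than an exhaustive one.

```python
import fnmatch
import re

# Hypothetical skip list: config and dependency files, matched by file name.
SKIP_PATTERNS = (
    "package.json",
    "package-lock.json",
    "yarn.lock",
    "*.lock",
    "*.toml",
    "*.yml",
    "*.yaml",
)

FILE_HEADER_RE = re.compile(r"diff --git a/(\S+) b/(\S+)")


def drop_skipped_files(patch: str, skip_patterns=SKIP_PATTERNS) -> str:
    """Remove whole per-file sections whose file name matches a skip pattern."""
    kept = []
    # Split right before every "diff --git" line, keeping each header with its section.
    for section in re.split(r"(?m)^(?=diff --git )", patch):
        header = FILE_HEADER_RE.match(section)
        if header:
            file_name = header.group(2).rsplit("/", 1)[-1]
            # fnmatchcase keeps matching case-sensitive on every platform.
            if any(fnmatch.fnmatchcase(file_name, p) for p in skip_patterns):
                continue
        kept.append(section)
    return "".join(kept)
```

The prompt instruction can stay as a backstop, but the filter removes those files deterministically and shrinks the input the model has to read.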

Letting each component do what it does best is the key to moving from a fragile demo to a robust, production-ready system. Don't let the magic of the LLM blind you to the power of well-applied traditional code.

Putting It All Together

So, how do we reliably get from a promising idea to a product that drives outcomes? We stop treating the LLM as the entire solution and start treating it as a powerful component in our engineering toolbox.

The journey we followed shows that the principles of good software development haven't changed. We simply need to adapt them:

- Validate ideas first, because your time is valuable.

- Build a fast development loop, because iteration speed is everything.

- Measure performance with a robust evaluation set (TDD for LLMs).

- Debug the model's logic by reading its thoughts.

- Architect a hybrid system that uses code and the LLM for what each is best suited to.

The goal isn't to be a "prompt engineer"—it's to be a great software engineer who effectively leverages LLMs. Stop chasing magic. Start engineering.

Sam Saccone @samccone